Video compression is a huge part of our technology. We are able to take advantage of enormous investments in the standardized formats and hardware for encoding and decoding video for our low-latency game streaming. We get a lot of questions about why an image may have some blur at moments or why the reds aren’t as crisp as they are when someone sits in front of their gaming PC. To explain some of these dynamics, we thought it would be valuable to give a brief introduction to video compression and build up a general understanding of the technologies at play. The history of compression, why there was a push to switch from analogue to digital video, and the impact of decisions to lower bitrates explain where the trade-offs are for low-latency, compressed video over the internet.

Early Compression

VHS and analogue TV broadcast

Analogue video is stored and transmitted as waveforms representing luminance (brightness) and chroma (color) for each scan line (which is how CRTs draw an image). This is how analogue broadcast and VHS systems work: they create a signal made of several waveforms overlaid on top of each other, meaning that you can pack all luminance and color information into a single stream. This method does have many downsides though.

Because the signals are not physically separated and are part of the same stream, there is a large chance of crosstalk. This is easy to see when plugging your old game console into an LCD TV: many of the colors blend or have weird edge effects.

Because you’re locked to a specific transmission rate (which must match the CRT), you are limited in resolution and clarity. This led to the development of interlaced signals, where each transmitted field carries only half of the frame’s scan lines (alternating between the odd and even lines). This is very effective in static scenes, and has some benefit in high-motion scenes because you can essentially double the frame rate for the same transmission rate. Unfortunately, in motion it gives quite poor results on a fixed-resolution device like an LCD, whereas on a CRT it was unnoticeable.

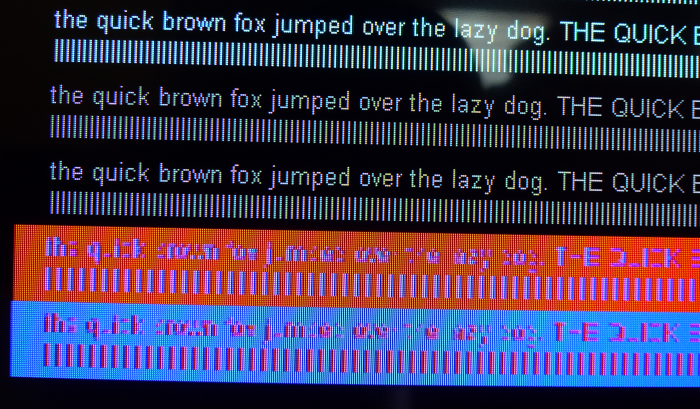

Interlacing clearly visible on an LCD panel
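
The combing effect described above can be reproduced with a minimal sketch. The helper names (`split_fields`, `weave`, `make_frame`) are hypothetical, frames are modelled as lists of text scan lines, and an even number of lines is assumed.

```python
def split_fields(frame):
    """Split a progressive frame (a list of scan lines) into two fields."""
    top_field = frame[0::2]     # lines 0, 2, 4, ...
    bottom_field = frame[1::2]  # lines 1, 3, 5, ...
    return top_field, bottom_field


def weave(top_field, bottom_field):
    """Re-interleave two fields into one frame (the simplest deinterlacer)."""
    frame = []
    for top_line, bottom_line in zip(top_field, bottom_field):
        frame.append(top_line)
        frame.append(bottom_line)
    return frame


def make_frame(bar_x, width=8, height=4):
    """Build a test frame with a vertical bar at column bar_x."""
    line = "".join("#" if x == bar_x else "." for x in range(width))
    return [line for _ in range(height)]


# Two moments in time: the bar moves from column 2 to column 5.
frame_a = make_frame(2)
frame_b = make_frame(5)

# An interlaced signal sends the top field of one moment and the
# bottom field of the next, so each field is half the data of a frame.
top, _ = split_fields(frame_a)
_, bottom = split_fields(frame_b)

# A fixed-resolution display that weaves both fields together shows
# the bar in two places at once on alternating lines.
combed = weave(top, bottom)
for line in combed:
    print(line)
```

In a static scene both fields come from identical pictures, so weaving them restores the full frame exactly; the artifact only appears when something moves between fields.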

Clearing The Air

Analogue inefficiencies

Outside of reception and noise issues, analogue broadcast and VHS looked pretty good on CRT TVs, but analogue signals are uncompressed.

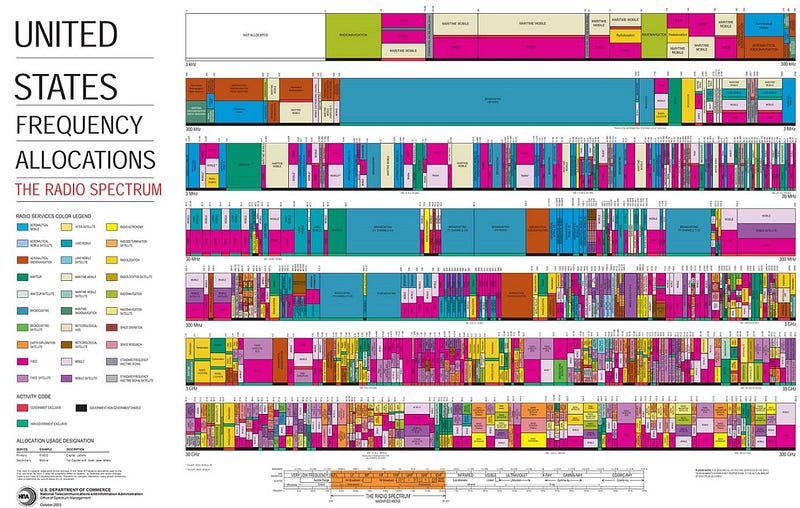

Governments were looking to free up space in the frequency spectrum for emerging technologies, but each standard definition broadcast channel used up one 8 MHz band (in the PAL broadcast standard), which was incredibly inefficient. Practical frequencies for over-the-air technologies are quite scarce, so there was a need for more efficient methods of delivering video by broadcast. Freeing up these frequencies would allow for things like LTE. Digital video broadcast was the answer: it used MPEG-2 (and, in later revisions, MPEG-4), meaning that you could take an analogue 8 MHz band and squeeze approximately 9 standard definition digital channels into the same space.

A graphic that displays the currently allocated radio spectrum in the United States

The Digital Video Revolution

Consumer demand for better image quality and an industry demand for more efficiency meant a push to digital.

In the 90s, consumer demand was increasing for higher quality television and movie experiences in the home. In addition, the computer revolution meant that consumers were accessing media on more devices than ever before. Digital audio in the form of CDs had taken the world by storm with their improved audio quality over tape, their longer playback time than vinyl, and their high capacity for computer storage.

In 1993, the standards body Moving Picture Experts Group (MPEG) released the MPEG-1 standard. The goal of this standard was to compress a video and audio signal into a bandwidth of up to around 1.5 Mbps (an approximation of VHS quality). This standard relied on several ideas: key frames, predicted frames, color compression and image compression.

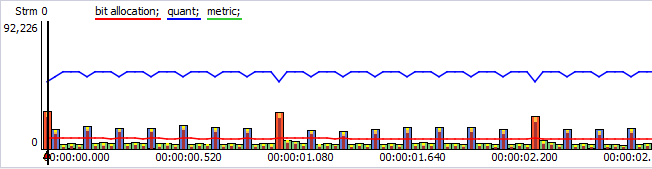

An example of I, P and B frames in HEVC video

Key frames and prediction mean that a video file does not necessarily have to store or transmit 60 full frames for 1 second of 60 FPS video. Instead, an MPEG encoder can place key frames regularly through the video (these are larger, and are encoded directly from the source) and, between them, make prediction calculations to fill in the gaps.
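
As a rough illustration of the idea, the sketch below stores a full key frame at a fixed interval and, for every other frame, only the pixels that changed since the previous frame. This is a deliberate simplification: real MPEG encoders use motion-compensated prediction and transform coding rather than a per-pixel diff, and the names `encode`, `decode`, `stored_values` and `KEYFRAME_INTERVAL` are hypothetical.

```python
KEYFRAME_INTERVAL = 4  # assumption: one key frame every 4 frames


def encode(frames, interval=KEYFRAME_INTERVAL):
    """Encode frames (lists of pixel values) as key frames plus deltas."""
    encoded = []
    previous = None
    for index, frame in enumerate(frames):
        if index % interval == 0:
            # Key frame: store every pixel, independent of other frames.
            encoded.append(("key", list(frame)))
        else:
            # Delta frame: store only the pixels that differ from the
            # previous frame, keyed by pixel position.
            changes = {
                i: value
                for i, (value, old) in enumerate(zip(frame, previous))
                if value != old
            }
            encoded.append(("delta", changes))
        previous = frame
    return encoded


def decode(encoded):
    """Rebuild every frame by applying deltas on top of the last frame."""
    frames = []
    current = None
    for kind, payload in encoded:
        if kind == "key":
            current = list(payload)
        else:
            current = list(current)
            for i, value in payload.items():
                current[i] = value
        frames.append(current)
    return frames


def stored_values(encoded):
    """Count how many pixel values the encoded stream has to carry."""
    return sum(len(payload) for _, payload in encoded)


# Six tiny 8-pixel frames with one bright pixel moving to the right.
frames = [[255 if x == t else 0 for x in range(8)] for t in range(6)]
encoded = encode(frames)

print("raw values:   ", sum(len(f) for f in frames))
print("stored values:", stored_values(encoded))
print("lossless:     ", decode(encoded) == frames)
```

In this toy clip the encoded stream carries half as many values as the raw frames. The trade-off is that a delta frame cannot be decoded without the frames before it, which is why key frames are repeated regularly.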

To aid image compression and make predictions less obvious, the image is split up into thousands of segments (in HEVC, the highest-level segment is a coding tree unit, or CTU). These segments align to a grid, but can be subdivided depending on the detail within the segment.

Example of subdivisions inside an HEVC video
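
The subdivision can be sketched as a quadtree: a block is kept whole when it is flat, and split into four smaller blocks when it contains detail. The detail measure (brightest minus darkest pixel), the `threshold` and the `min_size` below are assumptions chosen for illustration; real encoders choose splits by weighing bit cost against distortion.

```python
def subdivide(image, x, y, size, min_size=2, threshold=10):
    """Return (x, y, size) blocks covering a square region of the image.

    A region is split into four quadrants while it is larger than
    min_size and its pixel range exceeds threshold.
    """
    values = [
        image[row][col]
        for row in range(y, y + size)
        for col in range(x, x + size)
    ]
    detail = max(values) - min(values)
    if size <= min_size or detail <= threshold:
        return [(x, y, size)]

    half = size // 2
    blocks = []
    for dy in (0, half):
        for dx in (0, half):
            blocks.extend(
                subdivide(image, x + dx, y + dy, half, min_size, threshold)
            )
    return blocks


# An 8x8 flat grey image with a single bright pixel in the top-left corner.
image = [[50] * 8 for _ in range(8)]
image[0][0] = 200

blocks = subdivide(image, 0, 0, 8)
for block in blocks:
    print(block)
```

Only the quadrant containing the bright pixel is split down to the smallest block size; the three flat quadrants stay as single large blocks, so detail is spent where the image needs it.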

It also introduced a new way to encode color which would give further reduction in data requirements.

RGB VS YCbCr

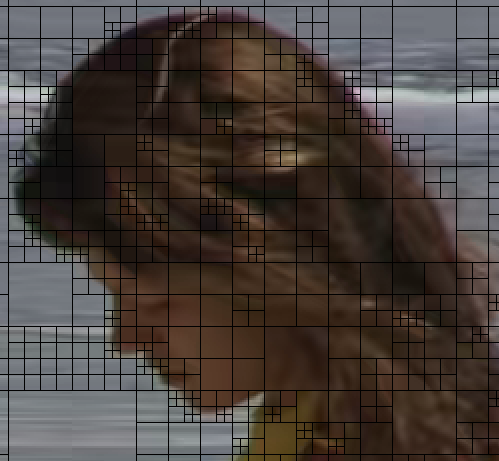

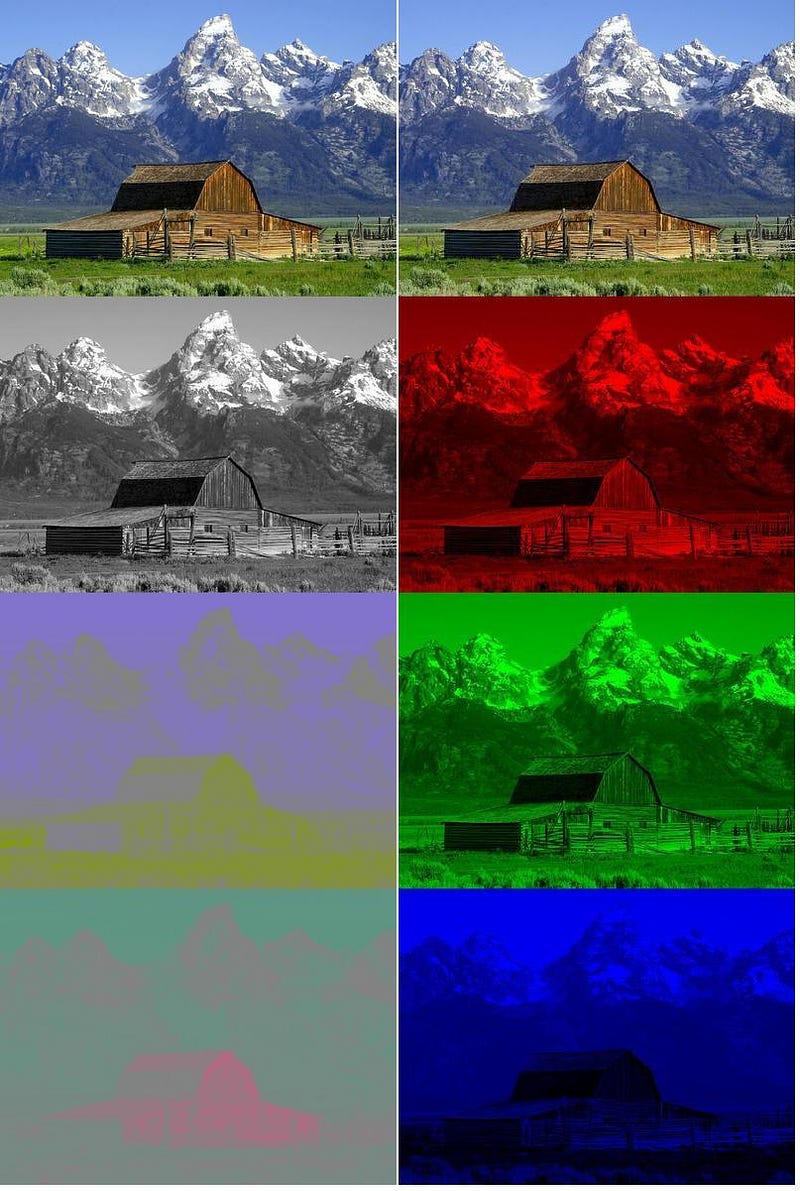

If you’re familiar with RGB, you will know that almost all modern display technologies are made up of a grid of red, green and blue elements (these are called subpixels, and each one contributes to the final pixel color displayed). RGB is an additive color model, meaning that red, green and blue each have a luminance value built in, and combining them at different brightness levels produces different colors.

The RGB format is a fantastic way to transmit data that will be displayed on an RGB panel, as it involves no conversion process or upscaling. The only downside is that RGB is uncompressed: a 1920×1080 @ 60 Hz signal stream is approximately 3 Gbps (excluding the alpha/transparency channel), which is far too high for even modern fibre internet connections.
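
The figure above comes from multiplying out the pixel count, the refresh rate and the bits per pixel. A minimal sketch of that arithmetic, assuming 8 bits for each of the three channels (the function name `raw_bitrate_bps` is hypothetical):

```python
def raw_bitrate_bps(width, height, fps, bits_per_pixel=24):
    """Bits per second needed to send every pixel of every frame uncompressed."""
    return width * height * fps * bits_per_pixel


bitrate = raw_bitrate_bps(1920, 1080, 60)
print(f"{bitrate:,} bits per second")
print(f"{bitrate / 1e9:.2f} Gbps")
```

This works out to 2,985,984,000 bits per second, or about 2.99 Gbps, before any audio or protocol overhead.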

Many years ago, it was discovered that the human eye was more sensitive to luminosity than color, thus broadcast and VHS displayed much higher luminosity resolution than color resolution, for only a “small loss” in perceptible image quality.

This idea led to the creation of YCbCr, which carries the same picture as RGB but separates it into luminance (Y), a blue-difference channel (Cb) and a red-difference channel (Cr). Notice there is no green channel there? Green is not stored directly; the decoder recovers it by removing the red and blue contributions from the luminance channel. There is also no subpixel information included, only the final color (important later). This is one reason that if you’re getting no video data via Parsec, your entire screen will be green: a frame with all three channels at zero decodes to green.
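
To see how green falls out of the conversion, here is a minimal sketch using the full-range BT.601 coefficients (the ones used by JPEG). The choice of coefficients is an assumption for illustration; the article does not say which matrix Parsec uses, and video commonly uses limited-range BT.601 or BT.709 instead.

```python
def clamp(value):
    """Round to the nearest integer and limit to the 8-bit range 0..255."""
    return max(0, min(255, round(value)))


def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit RGB to 8-bit YCbCr (full-range BT.601, an assumption)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return clamp(y), clamp(cb), clamp(cr)


def ycbcr_to_rgb(y, cb, cr):
    """Convert 8-bit YCbCr back to 8-bit RGB (full-range BT.601)."""
    r = y + 1.402 * (cr - 128)
    # Green is rebuilt from luminance minus the blue and red differences.
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return clamp(r), clamp(g), clamp(b)


# White has full luminance and neutral (128) color-difference channels.
print(rgb_to_ycbcr(255, 255, 255))

# An empty buffer is all zeros, which is NOT neutral for Cb and Cr.
print(ycbcr_to_rgb(0, 0, 0))
```

With these coefficients the all-zero frame decodes to `(0, 135, 0)`, a solid mid green. The neutral value for Cb and Cr is 128, so zero means strongly negative blue and red differences, and only green is left.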

Right: YCbCr channels, Left: RGB channels

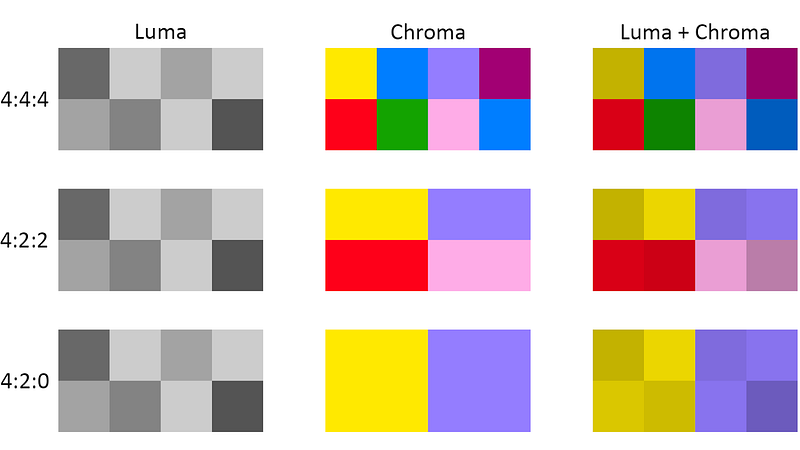

YCbCr as a format is presented in 3 main sub-formats: 4:4:4, 4:2:2 and 4:2:0.

4:4:4 is full luminance and color resolution, 4:2:2 halves the color data, and 4:2:0 quarters it.

As you can see here, luminance detail is always retained, as this is the easiest way to retain perceived resolution in the final image.
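
To make the ratios above concrete, the sketch below counts the samples in one frame for each sub-format and shows one simple way to produce a 4:2:0 color plane. The names are hypothetical, even frame dimensions are assumed, and averaging each 2×2 block is only one of several possible downsampling filters.

```python
# Horizontal and vertical divisors applied to the two color planes.
SUBSAMPLING = {
    "4:4:4": (1, 1),  # color at full resolution
    "4:2:2": (2, 1),  # color halved horizontally
    "4:2:0": (2, 2),  # color halved horizontally and vertically
}


def samples_per_frame(width, height, scheme):
    """Total samples in one frame: one luminance plane plus two color planes."""
    h_div, v_div = SUBSAMPLING[scheme]
    luma = width * height
    chroma = 2 * (width // h_div) * (height // v_div)
    return luma + chroma


def subsample_420(plane):
    """Shrink a color plane by averaging each 2x2 block into one sample."""
    return [
        [
            (plane[y][x] + plane[y][x + 1]
             + plane[y + 1][x] + plane[y + 1][x + 1]) // 4
            for x in range(0, len(plane[0]), 2)
        ]
        for y in range(0, len(plane), 2)
    ]


full = samples_per_frame(1920, 1080, "4:4:4")
for scheme in SUBSAMPLING:
    count = samples_per_frame(1920, 1080, scheme)
    print(f"{scheme}: {count:,} samples ({count / full:.0%} of 4:4:4)")
```

For a 1920×1080 frame this gives 6,220,800 samples for 4:4:4, 4,147,200 for 4:2:2 and 3,110,400 for 4:2:0, so 4:2:0 halves the raw data before any other compression is applied, while the luminance plane is untouched.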

4:2:0 has been accepted as the standard format for consumer video (where motion and lack of pixel perfection mask the lack of color definition) and is used in all DVDs, Blu-Ray and Ultra HD Blu-Ray movies, as well as online video streaming. It provides good compression while retaining acceptable definition.

The place where 4:2:0 falls apart, however, is with sources that use RGB subpixel data or require fine color detail. Computer user interfaces (especially text) rely heavily on rendering techniques that assume an uncompressed RGB signal. When that is not provided, it becomes immediately obvious.

See this great demonstration from user JEGX at Geeks3D, who posted how his 4K TV was only capable of displaying 4:2:0 over HDMI, resulting in very low color resolution for the red and blue text (notice how the black and white text remained completely readable, as luminance is never subsampled).

Bit Depth

There are levels to this

Not only can color and luminosity be divided into different channels and compressed separately, but a choice can also be made as to how many levels of luminosity and color there are between complete black and complete white.

Image showing how high bit depth improves the smoothness of the gradient between black and white

Most modern computers will produce 256 levels of color (8 bits) for each RGB channel, as well as 256 levels of transparency, for a total display depth of 32 bits. This is converted to a final 24-bit signal and sent to the display. Most consumer monitors will display your image at either 6 bits or 8 bits per channel, so display technology and video have been targeted at 8 bits, as any additional information would be wasteful. A 24-bit image displayed on an 8-bit panel can produce up to a total of 16.7 million color combinations.

More recently, there has been a push for high bit depth video as part of HEVC and Ultra HD Blu-Ray HDR10. HDR10 (10-bit) and Dolby Vision (12-bit) greatly increase the number of colors available, and also increase the file size.
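
The color counts above follow directly from the number of bits per channel. A minimal sketch of the arithmetic, assuming three color channels per pixel (the function names are hypothetical):

```python
def levels_per_channel(bits):
    """Number of distinct levels one channel can take at a given bit depth."""
    return 2 ** bits


def total_colors(bits, channels=3):
    """Number of distinct colors when every channel uses the same bit depth."""
    return levels_per_channel(bits) ** channels


for bits in (6, 8, 10, 12):
    print(
        f"{bits}-bit: {levels_per_channel(bits):,} levels per channel, "
        f"{total_colors(bits):,} colors"
    )
```

An 8-bit channel gives 256 levels and 16,777,216 colors (the 16.7 million figure), 10-bit gives 1,024 levels and 1,073,741,824 colors, and 12-bit gives 4,096 levels and 68,719,476,736 colors. Each extra bit doubles the number of steps in a gradient, which is what smooths out visible banding.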

MPEG-1 to HEVC

MPEG is a standards body that decides specifications for new video formats. It’s a group composed of many different leading technology companies, who all pay a fee to be a member, and contribute their ideas to standards.

The first standard was the MPEG-1 standard, and, as discussed above, the goal for this standard was to create a video file that looked as good as a VHS. It incorporated chroma subsampling, keyframes, predicted frames, and a very clever technique to only include delta changes from the keyframe in subsequent frames.

This image demonstrates how delta changes would be calculated

MPEG-1

MPEG-1 made its way into a few products, primarily old satellite/cable TV boxes. The biggest revolution that came with MPEG-1 was the MP3, which is the third audio layer of the standard (MPEG-1 Audio Layer III).

MPEG-2

MPEG-2 came two years later, in 1995. It included support for interlaced video, and defined two container formats: Transport Stream and Program Stream. A Program Stream was seen by the decoder as a fixed-length file, such as a video stored on a hard drive. A Transport Stream was seen by the decoder as a video without an end, which made it suitable for broadcast. MPEG-2 was very successful. It became the format of the widely popular DVD, and became the mainstream digital broadcast format.

MPEG-4/AVC

MPEG-4/AVC was finalized in 2003 to address the need for smaller video file sizes, suitable for transfer over the internet. MPEG-4/AVC (MPEG-4 Part 10) and the very well-known H.264 are two names for the same codec, which MPEG developed jointly with the ITU-T. It built on the technologies inside MPEG-1/2 to allow even higher compression (at some cost to image quality). It also allowed higher image quality at high bit rates by supporting 4:4:4 and 4:2:2 chroma subsampling, as well as high bit depth (known as deep color on Blu-Ray). Blu-Ray employed H.264 as the primary format for movies.

H.264

H.264 saw mainstream adoption in computer systems due to YouTube, Netflix and other mainstream video services on the internet, and because consumers wanted to create, watch and store video on their computers. Hardware decoders and encoders were created to allow power-efficient and fast methods for viewing and creating H.264 content. As of 2018, H.264 is still the most popular video format in the world, and is used by Parsec!

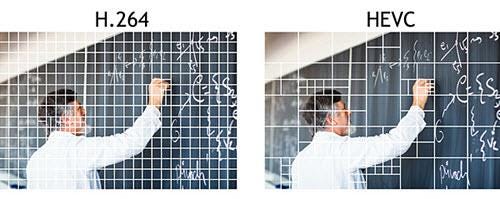

HEVC / H.265

HEVC is a further advancement of H.264 technologies, with the specification ratified in 2013. The standard aims to halve the bit rate for the same image quality as H.264. It achieves this by allowing more flexibility in the video grid size, at the cost of far higher computation when encoding and decoding the video.

HEVC also includes support for the Main 10 profile (10-bit video, used for HDR) as well as the Rec. 2020 color space, which significantly expands the range of colors able to be displayed in the video.

Netflix and Prime Video both employ HEVC to enable 4K HDR support for streaming video. We are investigating HEVC for Parsec because of the lower bitrates required, but have found it increases latency on a lot of consumer hardware.